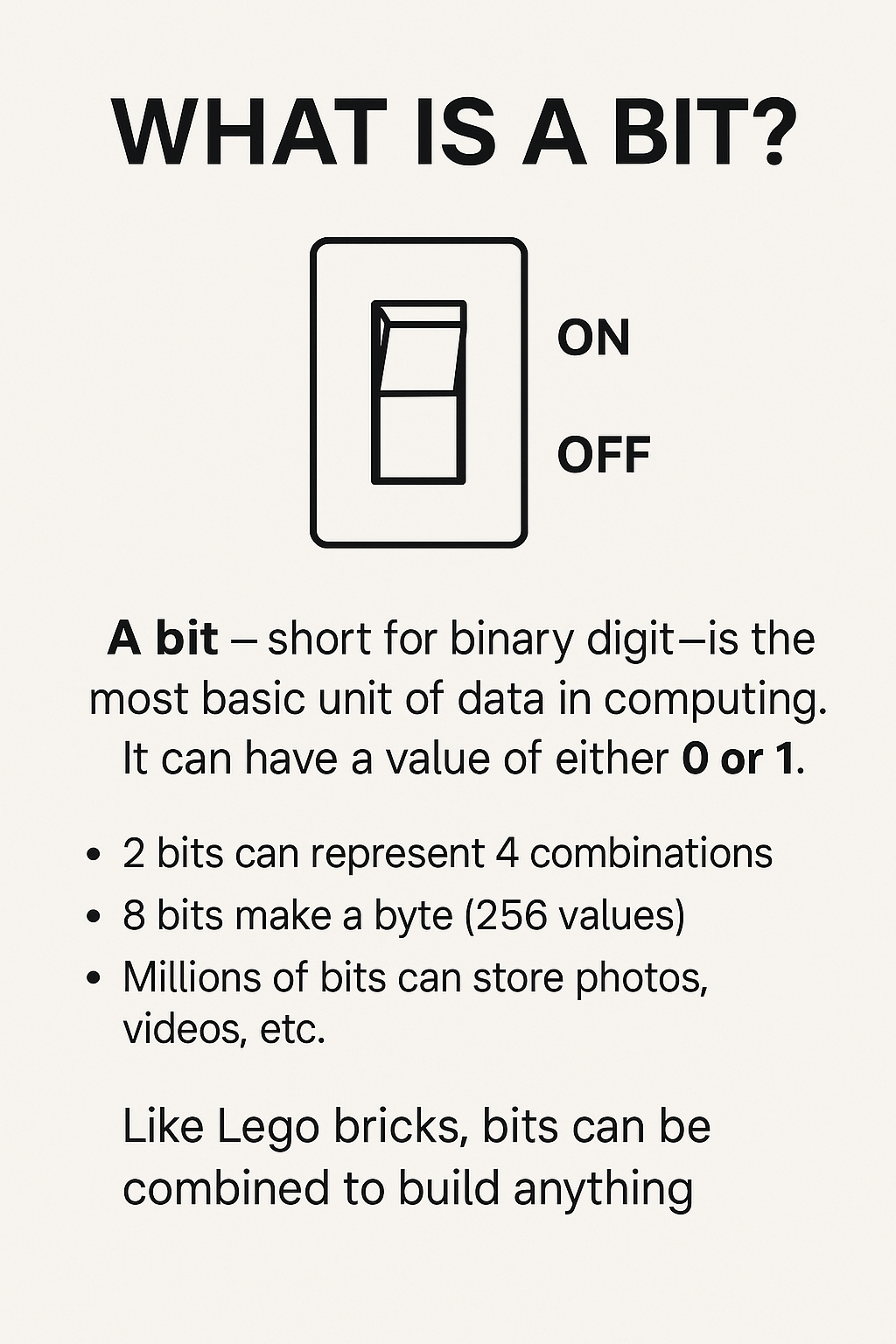

Imagine you’re at a light switch. Flip it up—it’s on. Flip it down—it’s off. That simple “on” or “off” is exactly how computers talk. And the smallest piece of that language? It’s called a bit.

A bit—short for binary digit—is the most basic unit of data in computing. It can only have one of two values: 0 or 1. Think of it like a tiny yes-or-no question: 1 means “yes”, 0 means “no”. That’s it.

Now, while a single bit doesn’t seem like much, the possibilities grow fast when you start putting them together: every extra bit doubles the number of combinations. For example:

- 2 bits can represent 4 different combinations (00, 01, 10, 11).

- 8 bits make a byte, which can represent 256 different values. That’s enough to store a letter like “A” (01000001 in the common ASCII encoding) or a whole number from 0 to 255.

- Millions and billions of bits are used to build your photos, videos, games, and everything else on your phone or computer.
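
If you’d like to see those numbers for yourself, here is a small Python sketch. The function name `bit_patterns` is made up for this example; it simply lists every pattern you can make with a given number of bits, and the last line shows the letter “A” as one byte, assuming the ASCII encoding.

```python
from itertools import product

def bit_patterns(n):
    """Return every possible pattern of n bits as a list of strings."""
    return ["".join(p) for p in product("01", repeat=n)]

print(bit_patterns(2))           # ['00', '01', '10', '11']
print(len(bit_patterns(8)))      # 256, the same as 2 ** 8
print(format(ord("A"), "08b"))   # 01000001, the letter "A" as one ASCII byte
```

Try changing the 2 to a 3 or a 4 and watch the list double each time.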

Think of bits like LEGO bricks. A single brick is simple, but stack enough of them in the right way, and you can build anything—from a castle to a spaceship. Similarly, every app, website, or file you’ve ever seen is just a complex arrangement of 0s and 1s.
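
To make the “arrangement of 0s and 1s” idea concrete, here is a rough Python sketch that turns a short piece of text into bits and back again. The helper names `text_to_bits` and `bits_to_text` are invented for this illustration, and it assumes the text is stored with the UTF-8 encoding, which is one common choice among several.

```python
def text_to_bits(text):
    """Encode text as UTF-8 and show each byte as a group of 8 bits."""
    return " ".join(format(byte, "08b") for byte in text.encode("utf-8"))

def bits_to_text(bits):
    """Reverse text_to_bits: turn space-separated 8-bit groups back into text."""
    return bytes(int(group, 2) for group in bits.split()).decode("utf-8")

bits = text_to_bits("Hi")
print(bits)                 # 01001000 01101001
print(bits_to_text(bits))   # Hi
```

Two letters become sixteen bits, and the same sixteen bits read back as the same two letters. Photos, songs, and apps work on the same principle, just with far more bits and different rules for reading them.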

Why is this important? Because understanding bits is like learning the alphabet of computers. Once you know how it starts, the whole digital world begins to make sense.

And as technology evolves, with faster internet, smarter AI, and even quantum computing on the horizon, bits remain the foundation of the computers we use every day. Want to go deeper? Next, explore how bytes, kilobytes, and beyond build our digital universe.
