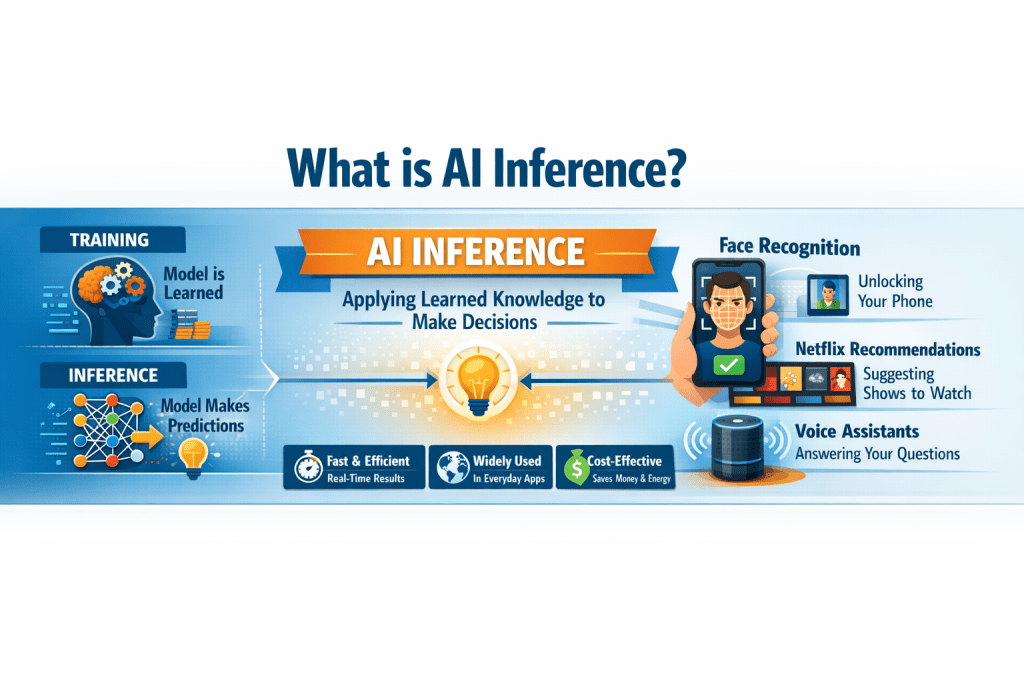

Artificial Intelligence (AI) often sounds complicated, but at its core, it works in two main phases: training and inference. While training gets most of the attention, AI inference is the part you actually interact with every day.

Simply put, AI inference is when a trained AI model uses what it has learned to make predictions or decisions on new data. It’s the moment when AI goes from learning to doing.

Think of it like studying for an exam. Training is the studying part. Inference is when you sit for the exam and answer the questions using what you already learned.
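To make the exam analogy concrete, here is a minimal sketch in Python. It is a toy, not how production AI systems are built: the data (hours studied vs. exam score) is made up, and the function names `train` and `infer` are just illustrative. The point is that training produces a model once, and inference reuses it on new input without learning anything new.

```python
def train(xs, ys):
    """Training: fit y = slope * x + intercept with least squares."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var = sum((x - mean_x) ** 2 for x in xs)
    slope = cov / var
    intercept = mean_y - slope * mean_x
    return slope, intercept  # this pair of numbers is the "trained model"


def infer(model, x_new):
    """Inference: apply the already-trained model to data it has not seen."""
    slope, intercept = model
    return slope * x_new + intercept


# Hypothetical toy data: hours studied -> exam score.
hours = [1.0, 2.0, 3.0, 4.0]
scores = [52.0, 54.0, 56.0, 58.0]

model = train(hours, scores)     # the studying part, done once
prediction = infer(model, 5.0)   # the exam part, repeated as often as needed
print(prediction)
```

Notice that `infer` never touches the training data. It only needs the finished model, which is why inference can be so much lighter than training.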

How AI Inference Works in Real Life

Let’s look at some everyday examples.

When you unlock your phone using face recognition, the AI is not learning your face again. Instead, it compares your face to what it already knows and decides, “Yes, this matches.” That decision-making step is inference.
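Here is a rough sketch of what that comparison could look like. Real face unlock systems are proprietary and far more sophisticated, so treat everything here as an assumption: the idea that a face is summarized as a short list of numbers (an "embedding"), the use of cosine similarity, the threshold of 0.9, and all the sample values are hypothetical.

```python
import math


def cosine_similarity(a, b):
    """Return how closely two vectors point in the same direction (1.0 = identical)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Hypothetical cutoff; a real system would tune this carefully.
MATCH_THRESHOLD = 0.9


def is_match(enrolled, candidate, threshold=MATCH_THRESHOLD):
    """Inference step: compare a new face to the stored one and decide."""
    return cosine_similarity(enrolled, candidate) >= threshold


# Made-up embeddings; a trained model would produce these from camera images.
enrolled_face = [0.9, 0.1, 0.4]
same_person = [0.88, 0.12, 0.41]
stranger = [0.1, 0.9, 0.2]

print(is_match(enrolled_face, same_person))
print(is_match(enrolled_face, stranger))
```

Nothing is being learned in this snippet. The stored numbers stay fixed, and each unlock attempt is just a quick comparison, which is what makes it fast enough to run on a phone.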

Another example is Netflix recommendations. The model was trained earlier on viewing habits of millions of users. When you open Netflix and see suggested shows, inference is happening in real time to predict what you might like next.

Voice assistants like Siri or Alexa also rely heavily on inference. When you ask a question, a trained model processes your voice and produces a response within moments.

Why AI Inference Is So Important

AI inference is important because it’s where AI delivers value. Without inference, AI would just be a trained brain sitting idle.

Inference also needs to be fast and efficient. For example, self-driving cars must make split-second decisions. Any delay in inference could be dangerous. That’s why companies work hard to optimize inference to run on phones, laptops, and edge devices, not just powerful servers.

Another key point is cost. Training an AI model is expensive, but it happens relatively rarely. For a popular service, inference can happen millions of times a day, so even small efficiency gains save money and energy.
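A quick back-of-the-envelope calculation shows why. Every number below is invented purely for illustration (the training cost, the cost per request, the daily request count, and the 20% efficiency gain); real figures vary enormously from one system to another.

```python
# All figures are hypothetical and chosen only to illustrate the arithmetic.
TRAINING_COST = 100_000.0      # one-time cost, in dollars
COST_PER_REQUEST = 0.001       # cost of a single inference, in dollars
REQUESTS_PER_DAY = 1_000_000   # how often the model is used


def yearly_inference_cost(cost_per_request, requests_per_day, days=365):
    """Total cost of running inference for a year."""
    return cost_per_request * requests_per_day * days


baseline = yearly_inference_cost(COST_PER_REQUEST, REQUESTS_PER_DAY)

# Suppose optimization makes each request 20% cheaper.
optimized = yearly_inference_cost(COST_PER_REQUEST * 0.8, REQUESTS_PER_DAY)
savings = baseline - optimized

print(f"Training (one-time):       ${TRAINING_COST:,.0f}")
print(f"Inference per year:        ${baseline:,.0f}")
print(f"Saved by a 20% speed-up:   ${savings:,.0f}")
```

With these made-up numbers, a year of inference costs more than the training run itself, and a modest per-request improvement adds up to a large saving simply because it is multiplied by every request.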

AI Inference vs AI Training

Training happens first and usually takes a long time. It involves large datasets and powerful hardware. Inference happens afterward and can run almost anywhere, from cloud servers to smartwatches.

You can think of training as building the brain, while inference is using the brain.

Key Takeaways

AI inference is the stage where trained AI models make predictions or decisions

It powers everyday tools like recommendations, voice assistants, and face unlock

Inference must be fast, accurate, and cost-efficient

Training teaches the model; inference applies that knowledge

Forward-Looking Insights

As AI continues to spread into healthcare, finance, and education, AI inference will become even more critical. We’ll see more inference happening directly on devices, making AI faster, more private, and more accessible to everyone.

If you enjoy learning about AI in simple terms, explore my e-books (Shafaat Ali) on Apple Books to deepen your understanding step by step.

